Antoine Beaupr : Drowned my camera: dealing with liquid spills in electronics

...even the SD cards were wet!

...even the SD cards were wet!

The last photo my camera took before it died

The last photo my camera took before it died

Tada! Turns out you can dehydrate hardware too!

Tada! Turns out you can dehydrate hardware too!

...even the SD cards were wet!

...even the SD cards were wet!

The last photo my camera took before it died

The last photo my camera took before it died

Tada! Turns out you can dehydrate hardware too!

Tada! Turns out you can dehydrate hardware too!

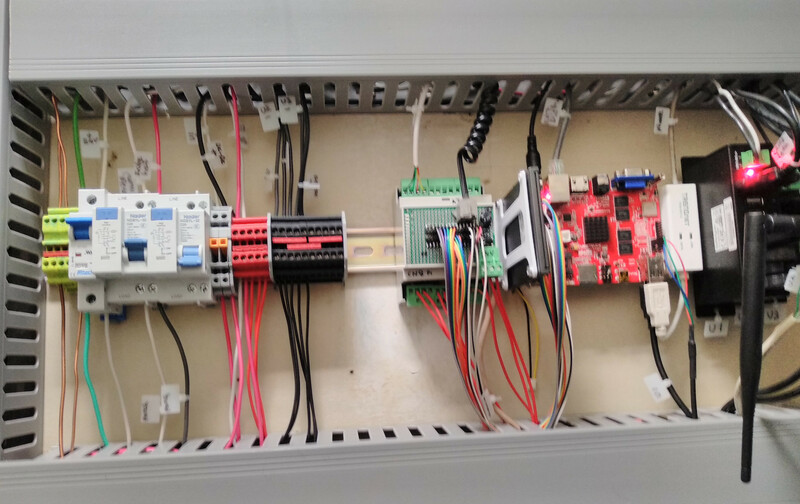

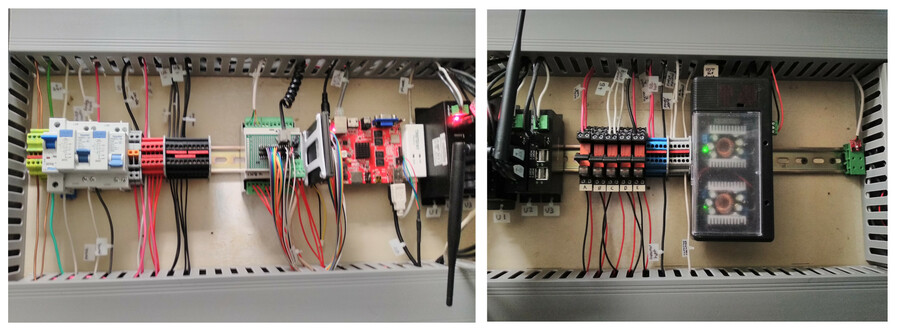

My offgrid house has an industrial automation panel.

My offgrid house has an industrial automation panel.

I started building this in February, before covid-19 was impacting us here,

when lots of mail orders were no big problem, and getting an unusual

3D-printed DIN rail bracket for a SSD was just a couple clicks.

I finished a month later, deep into social isolation and quarentine,

scrounging around the house for scrap wire, scavenging screws from unused

stuff and cutting them to size, and hoping I would not end up in a "need

just one more part that I can't get" situation.

It got rather elaborate, and working on it was often a welcome distraction

from the news when I couldn't concentrate on my usual work. I'm posting

this now because people sometimes tell me they like hearing about my offfgrid

stuff, and perhaps you could use a distraction too.

The panel has my house's computer on it, as well as both AC and DC power

distribution, breakers, and switching. Since the house is offgrid, the panel

is designed to let every non-essential power drain be turned off, from

my offgrid fridge to the 20 terabytes of

offline storage to the

inverter and satellite dish,

the spring pump for my gravity flow water system, and

even the power outlet by the kitchen sink.

Saving power is part of why I'm using old-school relays and stuff and not

IOT devices, the other reason is of course: IOT devices are horrible

dystopian e-waste. I'm taking the utopian Star Trek approach, where I

can command "full power to the vacuum cleaner!"

I started building this in February, before covid-19 was impacting us here,

when lots of mail orders were no big problem, and getting an unusual

3D-printed DIN rail bracket for a SSD was just a couple clicks.

I finished a month later, deep into social isolation and quarentine,

scrounging around the house for scrap wire, scavenging screws from unused

stuff and cutting them to size, and hoping I would not end up in a "need

just one more part that I can't get" situation.

It got rather elaborate, and working on it was often a welcome distraction

from the news when I couldn't concentrate on my usual work. I'm posting

this now because people sometimes tell me they like hearing about my offfgrid

stuff, and perhaps you could use a distraction too.

The panel has my house's computer on it, as well as both AC and DC power

distribution, breakers, and switching. Since the house is offgrid, the panel

is designed to let every non-essential power drain be turned off, from

my offgrid fridge to the 20 terabytes of

offline storage to the

inverter and satellite dish,

the spring pump for my gravity flow water system, and

even the power outlet by the kitchen sink.

Saving power is part of why I'm using old-school relays and stuff and not

IOT devices, the other reason is of course: IOT devices are horrible

dystopian e-waste. I'm taking the utopian Star Trek approach, where I

can command "full power to the vacuum cleaner!"

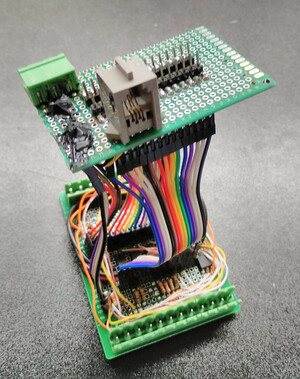

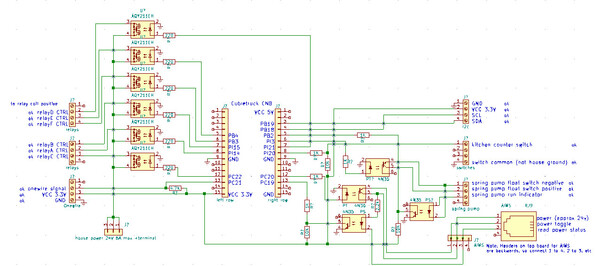

At the core of the panel, next to the cubietruck arm board, is a custom

IO daughterboard. Designed and built by hand to fit into a DIN mount case,

it uses every GPIO pin on the cubietruck's main GPIO header. Making

this board took 40+ hours, and was about half the project. It got

pretty tight in there.

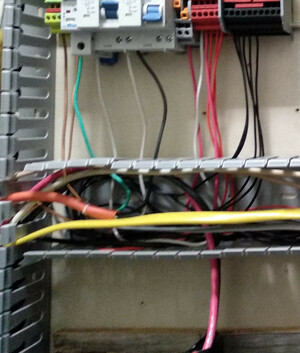

This was my first foray into DIN rail mount, and it really is industrial

lego -- a whole universe of parts that all fit together and are immensely

flexible. Often priced more than seems reasonable for a little bit of

plastic and metal, until you look at the spec sheets and the ratings.

(Total cost for my panel was $400.) It's odd that it's not more used

outside its niche -- I came of age in the Bay Area, surrounded by rack

mount equipment, but no DIN mount equipment. Hacking the hardware in

a rack is unusual, but DIN invites hacking.

Admittedly, this is a second system kind of project, replacing some

unsightly shelves full of gear and wires everywhere with something kind

of overdone. But should be worth it in the long run as new gear gets

clipped into place and it evolves for changing needs.

Also, wire gutters, where have you been all my life?

At the core of the panel, next to the cubietruck arm board, is a custom

IO daughterboard. Designed and built by hand to fit into a DIN mount case,

it uses every GPIO pin on the cubietruck's main GPIO header. Making

this board took 40+ hours, and was about half the project. It got

pretty tight in there.

This was my first foray into DIN rail mount, and it really is industrial

lego -- a whole universe of parts that all fit together and are immensely

flexible. Often priced more than seems reasonable for a little bit of

plastic and metal, until you look at the spec sheets and the ratings.

(Total cost for my panel was $400.) It's odd that it's not more used

outside its niche -- I came of age in the Bay Area, surrounded by rack

mount equipment, but no DIN mount equipment. Hacking the hardware in

a rack is unusual, but DIN invites hacking.

Admittedly, this is a second system kind of project, replacing some

unsightly shelves full of gear and wires everywhere with something kind

of overdone. But should be worth it in the long run as new gear gets

clipped into place and it evolves for changing needs.

Also, wire gutters, where have you been all my life?

Finally, if you'd like to know what everything on the DIN rail is, from

left to right: Ground block, 24v DC disconnect, fridge GFI, spare GFI, USB

hub switch, computer switch, +24v block, -24v block, IO daughterboard, 1tb

SSD, arm board, modem, 3 USB hubs, 5 relays, AC hot block, AC neutral

block, DC-DC power converters, humidity sensor.

Finally, if you'd like to know what everything on the DIN rail is, from

left to right: Ground block, 24v DC disconnect, fridge GFI, spare GFI, USB

hub switch, computer switch, +24v block, -24v block, IO daughterboard, 1tb

SSD, arm board, modem, 3 USB hubs, 5 relays, AC hot block, AC neutral

block, DC-DC power converters, humidity sensor.

Hey folks,

This is a quick blog post to tell you Bandcamp is waiving all their

fees on March 20th 2020 (PST). Spread the word, as every penny spent

on the platform that day will go back to the artists.

COVID-19 is throwing us all a mean curveball and artists have it especially

rough, particularly those who were in the middle of tours and had to cancel

them.

If you like Metal, Angry Metal Guy posted a very nice list of artists

you might know and want to help out.

If you are lucky enough to have a little coin around, now is the time to show

support for the artists you like. Buy an album you liked and copied from a

friend or get some merch to wear to your next virtual beer night with your

(remote) friends!

Stay safe and don't forget to wash your hands regularly.

Hey folks,

This is a quick blog post to tell you Bandcamp is waiving all their

fees on March 20th 2020 (PST). Spread the word, as every penny spent

on the platform that day will go back to the artists.

COVID-19 is throwing us all a mean curveball and artists have it especially

rough, particularly those who were in the middle of tours and had to cancel

them.

If you like Metal, Angry Metal Guy posted a very nice list of artists

you might know and want to help out.

If you are lucky enough to have a little coin around, now is the time to show

support for the artists you like. Buy an album you liked and copied from a

friend or get some merch to wear to your next virtual beer night with your

(remote) friends!

Stay safe and don't forget to wash your hands regularly.

Narabu is a new intraframe video codec. You may or may not want to read

part 1

first.

The GPU, despite being extremely more flexible than it was fifteen years

ago, is still a very different beast from your CPU, and not all problems

map well to it performance-wise. Thus, before designing a codec, it's

useful to know what our platform looks like.

A GPU has lots of special functionality for graphics (well, duh), but we'll

be concentrating on the compute shader subset in this context, ie., we

won't be drawing any polygons. Roughly, a GPU (as I understand it!) is built

up about as follows:

A GPU contains 1 20 cores; NVIDIA calls them SMs (shader multiprocessors),

Intel calls them subslices. (Trivia: A typical mid-range Intel GPU contains two cores,

and thus is designated GT2.) One such core usually runs the same program,

although on different data; there are exceptions, but typically, if your

program can't fill an entire core with parallelism, you're wasting energy.

Each core, in addition to tons (thousands!) of registers, also has some

shared memory (also called local memory sometimes, although that term

is overloaded), typically 32 64 kB, which you can think of in two ways:

Either as a sort-of explicit L1 cache, or as a way to communicate

internally on a core. Shared memory is a limited, precious resource in

many algorithms.

Each core/SM/subslice contains about 8 execution units (Intel

calls them EUs, NVIDIA/AMD calls them something else) and some memory

access logic. These multiplex a bunch of threads (say, 32) and run in

a round-robin-ish fashion. This means that a GPU can handle memory stalls

much better than a typical CPU, since it has so many streams to pick from;

even though each thread runs in-order, it can just kick off an operation

and then go to the next thread while the previous one is working.

Each execution unit has a bunch of ALUs (typically 16) and executes code in a SIMD

fashion. NVIDIA calls these ALUs CUDA cores , AMD calls them stream

processors . Unlike on CPU, this SIMD has full scatter/gather support

(although sequential access, especially in certain patterns, is much more efficient

than random access), lane enable/disable so it can work with conditional

code, etc.. The typically fastest operation is a 32-bit float muladd;

usually that's single-cycle. GPUs love 32-bit FP code. (In fact, in some

GPU languages, you won't even have 8-, 16-bit or 64-bit types. This is

annoying, but not the end of the world.)

The vectorization is not exposed to the user in typical code (GLSL has some

vector types, but they're usually just broken up into scalars, so that's a

red herring), although in some programming languages you can get to swizzle

the SIMD stuff internally to gain advantage of that (there's also schemes for

broadcasting bits by voting etc.). However, it is crucially important to

performance; if you have divergence within a warp, this means the GPU needs

to execute both sides of the if. So less divergent code is good.

Such a SIMD group is called a warp by NVIDIA (I don't know if the others have

names for it). NVIDIA has SIMD/warp width always 32; AMD used to be 64 but

is now 16. Intel supports 4 32 (the compiler will autoselect based on a bunch of

factors), although 16 is the most common.

The upshot of all of this is that you need massive amounts of parallelism

to be able to get useful performance out of a CPU. A rule of thumb is that

if you could have launched about a thousand threads for your problem on CPU,

it's a good fit for a GPU, although this is of course just a guideline.

There's a ton of APIs available to write compute shaders. There's CUDA (NVIDIA-only, but the

dominant player), D3D compute (Windows-only, but multi-vendor),

OpenCL (multi-vendor, but highly variable implementation quality),

OpenGL compute shaders (all platforms except macOS, which has too old drivers),

Metal (Apple-only) and probably some that I forgot. I've chosen to go for

OpenGL compute shaders since I already use OpenGL shaders a lot, and this

saves on interop issues. CUDA probably is more mature, but my laptop is

Intel. :-) No matter which one you choose, the programming model looks very

roughly like this pseudocode:

Narabu is a new intraframe video codec. You may or may not want to read

part 1

first.

The GPU, despite being extremely more flexible than it was fifteen years

ago, is still a very different beast from your CPU, and not all problems

map well to it performance-wise. Thus, before designing a codec, it's

useful to know what our platform looks like.

A GPU has lots of special functionality for graphics (well, duh), but we'll

be concentrating on the compute shader subset in this context, ie., we

won't be drawing any polygons. Roughly, a GPU (as I understand it!) is built

up about as follows:

A GPU contains 1 20 cores; NVIDIA calls them SMs (shader multiprocessors),

Intel calls them subslices. (Trivia: A typical mid-range Intel GPU contains two cores,

and thus is designated GT2.) One such core usually runs the same program,

although on different data; there are exceptions, but typically, if your

program can't fill an entire core with parallelism, you're wasting energy.

Each core, in addition to tons (thousands!) of registers, also has some

shared memory (also called local memory sometimes, although that term

is overloaded), typically 32 64 kB, which you can think of in two ways:

Either as a sort-of explicit L1 cache, or as a way to communicate

internally on a core. Shared memory is a limited, precious resource in

many algorithms.

Each core/SM/subslice contains about 8 execution units (Intel

calls them EUs, NVIDIA/AMD calls them something else) and some memory

access logic. These multiplex a bunch of threads (say, 32) and run in

a round-robin-ish fashion. This means that a GPU can handle memory stalls

much better than a typical CPU, since it has so many streams to pick from;

even though each thread runs in-order, it can just kick off an operation

and then go to the next thread while the previous one is working.

Each execution unit has a bunch of ALUs (typically 16) and executes code in a SIMD

fashion. NVIDIA calls these ALUs CUDA cores , AMD calls them stream

processors . Unlike on CPU, this SIMD has full scatter/gather support

(although sequential access, especially in certain patterns, is much more efficient

than random access), lane enable/disable so it can work with conditional

code, etc.. The typically fastest operation is a 32-bit float muladd;

usually that's single-cycle. GPUs love 32-bit FP code. (In fact, in some

GPU languages, you won't even have 8-, 16-bit or 64-bit types. This is

annoying, but not the end of the world.)

The vectorization is not exposed to the user in typical code (GLSL has some

vector types, but they're usually just broken up into scalars, so that's a

red herring), although in some programming languages you can get to swizzle

the SIMD stuff internally to gain advantage of that (there's also schemes for

broadcasting bits by voting etc.). However, it is crucially important to

performance; if you have divergence within a warp, this means the GPU needs

to execute both sides of the if. So less divergent code is good.

Such a SIMD group is called a warp by NVIDIA (I don't know if the others have

names for it). NVIDIA has SIMD/warp width always 32; AMD used to be 64 but

is now 16. Intel supports 4 32 (the compiler will autoselect based on a bunch of

factors), although 16 is the most common.

The upshot of all of this is that you need massive amounts of parallelism

to be able to get useful performance out of a CPU. A rule of thumb is that

if you could have launched about a thousand threads for your problem on CPU,

it's a good fit for a GPU, although this is of course just a guideline.

There's a ton of APIs available to write compute shaders. There's CUDA (NVIDIA-only, but the

dominant player), D3D compute (Windows-only, but multi-vendor),

OpenCL (multi-vendor, but highly variable implementation quality),

OpenGL compute shaders (all platforms except macOS, which has too old drivers),

Metal (Apple-only) and probably some that I forgot. I've chosen to go for

OpenGL compute shaders since I already use OpenGL shaders a lot, and this

saves on interop issues. CUDA probably is more mature, but my laptop is

Intel. :-) No matter which one you choose, the programming model looks very

roughly like this pseudocode:

for (size_t workgroup_idx = 0; workgroup_idx < NUM_WORKGROUPS; ++workgroup_idx) // in parallel over cores

char shared_mem[REQUESTED_SHARED_MEM]; // private for each workgroup

for (size_t local_idx = 0; local_idx < WORKGROUP_SIZE; ++local_idx) // in parallel on each core

main(workgroup_idx, local_idx, shared_mem);

except in reality, the indices will be split in x/y/z for your convenience

(you control all six dimensions, of course), and if you haven't asked for too

much shared memory, the driver can silently make larger workgroups if it

helps increase parallelity (this is totally transparent to you). main()

doesn't return anything, but you can do reads and writes as you wish;

GPUs have large amounts of memory these days, and staggering amounts of

memory bandwidth.

Now for the bad part: Generally, you will have no debuggers, no way of

logging and no real profilers (if you're lucky, you can get to know how long

each compute shader invocation takes, but not what takes time within the

shader itself). Especially the latter is maddening; the only real recourse

you have is some timers, and then placing timer probes or trying to comment

out sections of your code to see if something goes faster. If you don't

get the answers you're looking for, forget printf you need to set up a

separate buffer, write some numbers into it and pull that buffer down to

the GPU. Profilers are an essential part of optimization, and I had really

hoped the world would be more mature here by now. Even CUDA doesn't give

you all that much insight sometimes I wonder if all of this is because

GPU drivers and architectures are meant to be shrouded in mystery for

competitiveness reasons, but I'm honestly not sure.

So that's it for a crash course in GPU architecture. Next time, we'll start

looking at the Narabu codec itself.

Often, when I mention how things work in the interactive theorem prover [Isabelle/HOL] (in the following just Isabelle 1) to people with a strong background in functional programming (whether that means Haskell or Coq or something else), I cause confusion, especially around the issue of what is a function, are function total and what is the business with undefined. In this blog post, I want to explain some these issues, aimed at functional programmers or type theoreticians.

Note that this is not meant to be a tutorial; I will not explain how to do these things, and will focus on what they mean.

Often, when I mention how things work in the interactive theorem prover [Isabelle/HOL] (in the following just Isabelle 1) to people with a strong background in functional programming (whether that means Haskell or Coq or something else), I cause confusion, especially around the issue of what is a function, are function total and what is the business with undefined. In this blog post, I want to explain some these issues, aimed at functional programmers or type theoreticians.

Note that this is not meant to be a tutorial; I will not explain how to do these things, and will focus on what they mean.

f :: a b between two types a and b (the function arrow in Isabelle is , not ), then by definition of what it means to be a function in HOL whenever I have a value x :: a, then the expression f x (i.e. f applied to x) is a value of type b. Therefore, and without exception, every Isabelle function is total.

In particular, it cannot be that f x does not exist for some x :: a. This is a first difference from Haskell, which does have partial functions like

spin :: Maybe Integer -> Bool

spin (Just n) = spin (Just (n+1))spin Nothing nor the expression spin (Just 42) produce a value of type Bool: The former raises an exception ( incomplete pattern match ), the latter does not terminate. Confusingly, though, both expressions have type Bool.

Because every function is total, this confusion cannot arise in Isabelle: If an expression e has type t, then it is a value of type t. This trait is shared with other total systems, including Coq.

Did you notice the emphasis I put on the word is here, and how I deliberately did not write evaluates to or returns ? This is because of another big source for confusion:

a b in functional programming is an algorithm that, given a value of type a, calculates (returns, evaluates to) a value of type b.a b in math (or Isabelle) associates with each value of type a a value of type b.definition foo :: "(nat real) real" where

"foo seq = (if convergent seq then lim seq else 0)"fun plus :: "nat nat nat" where

"plus 0 m = m"

"plus (Suc n) m = Suc (plus n m)"which is clearly a description of a computation. But to Isabelle, the above readsplusis a function that analyses its first argument. If that is0, then it returns the second argument. Otherwise, it calls itself with the predecessor of the first argument and increases the result by one.

plus is a binary function on natural numbers, and it satisfies the following two equations:

And in fact, it is not so much Isabelle that reads it this way, but rather the fun command, which is external to the Isabelle logic. The fun command analyses the given equations, constructs a non-recursive definition of plus under the hood, passes that to Isabelle and then proves that the given equations hold for plus.

One interesting consequence of this is that different specifications can lead to the same functions. In fact, if we would define plus' by recursing on the second argument, we d obtain the the same function (i.e. plus = plus' is a theorem, and there would be no way of telling the two apart).

fun command can only construct plus in a way that the equations hold if it passes a termination check very much like Fixpoint in Coq.

But while the termination check of Fixpoint in Coq is a deep part of the basic logic, in Isabelle it is simply something that this particular command requires for its internal machinery to go through. At no point does a termination proof of the function exist as a theorem inside the logic. And other commands may have other means of defining a function that do not even require such a termination argument!

For example, a function specification that is tail-recursive can be turned in to a function, even without a termination proof: The following definition describes a higher-order function that iterates its first argument f on the second argument x until it finds a fixpoint. It is completely polymorphic (the single quote in 'a indicates that this is a type variable):

partial_function (tailrec)

fixpoint :: "('a 'a) 'a 'a"

where

"fixpoint f x = (if f x = x then x else fixpoint f (f x))"f with ( x. x-1), we can prove that it will always return 0:

lemma "fixpoint ( n . n - 1) (n::nat) = 0"

by (induction n) (auto simp add: fixpoint.simps)partial_function (option) collatz :: "nat nat list option"

where "collatz n =

(if n = 1 then Some [n]

else if even n

then do ns <- collatz (n div 2); Some (n # ns)

else do ns <- collatz (3 * n + 1); Some (n # ns) )"fixpoint be a total function? What is fixpoint ( n. n+1)? What if we run collatz n for a n where the Collatz sequence does not reach 1?3 We will come back to that question after a little detour

undefined :: 'aarbitrary or, even better, unknown.

Since undefined can be instantiated at any type, we can instantiate it for example at bool, and we can observe an important fact: undefined is not an extra value besides the usual ones . It is simply some value of that type, which is demonstrated in the following lemma:

lemma "undefined = True undefined = False" by autoundefined for sure:

lemma "undefined = ()" by autofromJust function:

fun fromSome :: "'a option 'a" where

"fromSome (Some x) = x"fun (albeit with a warning), and the generated function fromSome behaves exactly as specified: when applied to Some x, it is x. The term fromSome None is also a value of type 'a, we just do not know which one it is, as the specification does not address that.

So fromSome None behaves just like undefined above, i.e. we can prove

lemma "fromSome None = False fromSome None = True" by autofun constOrId :: "bool bool" where

"constOrId True = True"

lemma "constOrId = ( _.True) constOrId = ( x. x)"

by (metis (full_types) constOrId.simps)fixpoint above. Clearly, the function seen as a functional program is not total: When passed the argument ( n. n + 1) or ( b. b) it will loop forever trying to find a fixed point.

But Isabelle functions are not functional programs, and the definitions are just specifications. What does the specification say about the case when f has no fixed-point? It states that the equation fixpoint f x = fixpoint f (f x) holds. And this equation has a solution, for example fixpoint f _ = undefined.

Or more concretely: The specification of the fixpoint function states that fixpoint ( b. b) True = fixpoint ( b. b) False has to hold, but it does not specify which particular value (True or False) it should denote any is fine.

f and get a function out of that? But rest assured: That is not the case. For example, no Isabelle command allows you define a function bogus :: () nat with the equation bogus () = Suc (bogus ()), because this equation does not have a solution.

We can actually prove that such a function cannot exist:

lemma no_bogus: " bogus. bogus () = Suc (bogus ())" by simpnot_bogus () = not_bogus () is just fine )

undefined is not a separate, recognizable value, but rather simply an unknown one, there is no way of stating that A function result is not specified .

Here is an example that demonstrates this: Two partial functions (one with not all cases specified, the other one with a self-referential specification) are indistinguishable from the total variant:

fun partial1 :: "bool unit" where

"partial1 True = ()"

partial_function (tailrec) partial2 :: "bool unit" where

"partial2 b = partial2 b"

fun total :: "bool unit" where

"total True = ()"

"total False = ()"

lemma "partial1 = total partial2 = total" by auto () and partial2 = total. We have done that to verify some of HLint s equations.

fixpoint, which is not possible in Coq.

There is currently ongoing work about verified code generation, where the code equations are reflected into a deep embedding of HOL in Isabelle that would allow explicit termination proofs.

undefined in Coq

This section is speculative, and an invitation for discussion.

Coq already distinguishes between types used in programs (Set) and types used in proofs Prop.

Could Coq ensure that every t : Set is non-empty? I imagine this would require additional checks in the Inductive command, similar to the checks that the Isabelle command datatype has to perform4, and it would disallow Empty_set.

If so, then it would be sound to add the following axiom

Axiom undefined : forall (a : Set), a._ undefined. Would it help with non-obviously terminating functions? Would it allow a Coq command Tailrecursive that accepts any tailrecursive function without a termination check?

fun, the constructions by datatype are not part of the logic, but create a type definition from more primitive notions that is isomorphic to the specified data type. domain=int.foobar.example

dhcp-range=192.168.0.240,192.168.0.242,255.255.255.0,1h

dhcp-boot=bootnetx64.efi

pxe-service=X86-64_EFI, "Boot UEFI PXE-64", bootnetx64.efi

enable-tftp

tftp-root=/usr/lib/debian-installer/images/8/amd64/text

dhcp-option=3,192.168.0.1

dhcp-host=00:c0:ff:ee:00:01,192.168.0.123,foobar-01

set default="0"

set timeout=5

linux /debian-installer/amd64/linux vga=788 --- auto=true interface=eth1 netcfg/dhcp_timeout=60 netcfg/choose_interface=eth1 priority=critical preseed/url=tftp://192.168.0.2/preseed.cfg quiet

# auto method must be lvm

d-i partman-auto/method string lvm

d-i partman-lvm/device_remove_lvm boolean true

d-i partman-md/device_remove_md boolean true

d-i partman-lvm/confirm boolean true

d-i partman-lvm/confirm_nooverwrite boolean true

d-i partman-basicfilesystems/no_swap boolean false

# Keep that one set to true so we end up with a UEFI enabled

# system. If set to false, /var/lib/partman/uefi_ignore will be touched

d-i partman-efi/non_efi_system boolean true

# enforce usage of GPT - a must have to use EFI!

d-i partman-basicfilesystems/choose_label string gpt

d-i partman-basicfilesystems/default_label string gpt

d-i partman-partitioning/choose_label string gpt

d-i partman-partitioning/default_label string gpt

d-i partman/choose_label string gpt

d-i partman/default_label string gpt

d-i partman-auto/choose_recipe select boot-root-all

d-i partman-auto/expert_recipe string \

boot-root-all :: \

538 538 1075 free \

$iflabel gpt \

$reusemethod \

method efi \

format \

. \

128 512 256 ext2 \

$defaultignore \

method format format \

use_filesystem filesystem ext2 \

mountpoint /boot \

. \

1024 4096 15360 ext4 \

$lvmok \

method format format \

use_filesystem filesystem ext4 \

mountpoint / \

. \

1024 4096 15360 ext4 \

$lvmok \

method format format \

use_filesystem filesystem ext4 \

mountpoint /var \

. \

1024 1024 -1 ext4 \

$lvmok \

method format format \

use_filesystem filesystem ext4 \

mountpoint /var/lib \

.

# This makes partman automatically partition without confirmation, provided

# that you told it what to do using one of the methods above.

d-i partman-partitioning/confirm_write_new_label boolean true

d-i partman/choose_partition select finish

d-i partman-md/confirm boolean true

d-i partman/confirm boolean true

d-i partman/confirm_nooverwrite boolean true

# This is fairly safe to set, it makes grub install automatically to the MBR

# if no other operating system is detected on the machine.

d-i grub-installer/only_debian boolean true

d-i grub-installer/with_other_os boolean true

d-i grub-installer/bootdev string /dev/sda

Recently Rob and I visited

Soile and Lars. We had a lovely

time wandering around Helsinki with them, and I also spent a good chunk of time

with Lars working on some design and planning for the

Yarn test specification and tooling.

You see, I wrote a Rust implementation of Yarn

called rsyarn "for fun" and in doing

so I noted a bunch of missing bits in the understanding Lars and I shared about

how Yarn should work. Lars and I filled, and re-filled, a whiteboard with

discussion about what the 'Yarn specification' should be, about various

language extensions and changes, and also about what functionality a normative

implementation of Yarn should have.

This article is meant to be a write-up of all of that discussion, but before I

start on that, I should probably summarise what Yarn is.

Recently Rob and I visited

Soile and Lars. We had a lovely

time wandering around Helsinki with them, and I also spent a good chunk of time

with Lars working on some design and planning for the

Yarn test specification and tooling.

You see, I wrote a Rust implementation of Yarn

called rsyarn "for fun" and in doing

so I noted a bunch of missing bits in the understanding Lars and I shared about

how Yarn should work. Lars and I filled, and re-filled, a whiteboard with

discussion about what the 'Yarn specification' should be, about various

language extensions and changes, and also about what functionality a normative

implementation of Yarn should have.

This article is meant to be a write-up of all of that discussion, but before I

start on that, I should probably summarise what Yarn is.

/bin/true tool:

SCENARIO true should exit with code zero

WHEN /bin/true is run with no arguments

THEN the exit code is 0

AND stdout is empty

AND stderr is empty

IMPLEMENTS, for example:

IMPLEMENTS WHEN (\S+) is run with no arguments

set +e

"$ MATCH_1 " > "$ DATADIR /stdout" 2> "$ DATADIR /stderr"

echo $? > "$ DATADIR /exitcode"

IMPLEMENTS can use regular expressions

to capture parts of their invocation, allowing the test implementer to handle

many different scenario statements with one implementation block. For the rest

of the implementation, whatever you assume about things will probably be okay

for now.

SCENARIO, GIVEN, WHEN, THEN,

ASSUMING, FINALLY, AND, IMPLEMENTS, EXAMPLE, ...)IMPLEMENTS current directory, data directory, home directory, and also

environment.IMPLEMENTSIMPLEMENTS per statementASSUMING at the end, or FINALLY at the

start)IMPLEMENTS (and arguments for them)ASSUMING statements lead to an errorIMPLEMENTScmdtest (and which I shall refer to as pyyarn for now) and

my Rust based one (rsyarn).

rsyarn supports, but pyyarn does not, is running multiple

scenarios in parallel. However when I wrote that support into rsyarn I

noticed that there were plenty of issues with running stuff in parallel. (A

problem I'm sure any of you who know about threads will appreciate).

One particular issue was that scenarios often need to share resources which

are not easily sandboxed into the $ DATADIR provided by Yarn. For example

databases or access to limited online services. Lars and I had a good chat

about that, and decided that a reasonable language extension could be:

USING database foo

RESOURCE database (\S+)

LABEL database-$1

GIVEN a database called $1

FINALLY database $1 is torn down

USING statement should be reasonably clear in its pairing to a RESOURCE

statement. The LABEL statement I'll get to in a moment (though it's only

relevant in a RESOURCE block, and the rest of the statements are essentially

substituted into the calling scenario at the point of the USING.

This is nowhere near ready to consider adding to the specification though.

Both Lars and I are uncomfortable with the $1 syntax though we can't think of

anything nicer right now; and the USING/RESOURCE/LABEL vocabulary isn't

set in stone either.

The idea of the LABEL is that we'd also require that a normative Yarn

implementation be capable of specifying resource limits by name. E.g. if a

RESOURCE used a LABEL foo then the caller of a Yarn scenario suite could

specify that there were 5 foos available. The Yarn implementation would then

schedule a maximum of 5 scenarios which are using that label to happen

simultaneously. At bare minimum it'd gate new users, but at best it would

intelligently schedule them.

In addition, since this introduces the concept of parallelism into Yarn proper,

we also wanted to add a maximum parallelism setting to the Yarn implementation

requirements; and to specify that any resource label which was not explicitly

set had a usage limit of 1.

CALLING write foo

EXPANDING write (\S+)

GIVEN bar

WHEN $1 is written to

THEN success was had by all

CALLING/EXPANDING keywords are not fixed yet, nor is the $1

type syntax, though whatever is used here should match the other places where

we might want it.

GIVEN foo

... bar

... baz

GIVEN foo bar baz

YAML into a Yarn scenario is a pain, as would be including any

amount of another whitespace-sensitive input language.

After a lot of to-ing and fro-ing, we decided that the right thing to do would

be to redefine the ... Yarn statement to be whitespace preserving and to then

pass that whitespace through to be matched by the IMPLEMENTS or whatever. In

order for that to work, the regexp matching would have to be defined to treat

the input as a single line, allowing . to match \n etc.

Of course, this would mean that the old functionality wouldn't be possible, so

we considered allowing a \ at the end of a line to provide the current kind

of behaviour, rewriting the above example as:

GIVEN foo \

bar \

baz

... in any of

our Yarn suites where having the whitespace preserved would be an issue, we

decided it was worth the pain.

pyyarn and rsyarn and from there we can implement

our new proposals for extending Yarn to be even more useful.

| Publisher: | HarperCollins |

| Copyright: | February 2011 |

| ISBN: | 0-06-207881-X |

| Format: | Kindle |

| Pages: | 451 |

The motor drive attaches to the camera using the tripod socket, a replacement tripod socket is provided on the base of plate. There s also a metal plate with the bottom of the hand grip attached to it held on to the base plate with a thumb screw. When this is released it gives access to the screw holding in the battery compartment which (very conveniently) takes 6 AA batteries. This also provides power to the camera body when attached.

The motor drive attaches to the camera using the tripod socket, a replacement tripod socket is provided on the base of plate. There s also a metal plate with the bottom of the hand grip attached to it held on to the base plate with a thumb screw. When this is released it gives access to the screw holding in the battery compartment which (very conveniently) takes 6 AA batteries. This also provides power to the camera body when attached.

On the back of the base of the camera there s a button with a red LED next to it which illuminates slightly when the button is pressed (it s visible in low light only). I m not 100% sure what this is for, I d have guessed a battery check if the light were easier to see.

On the back of the base of the camera there s a button with a red LED next to it which illuminates slightly when the button is pressed (it s visible in low light only). I m not 100% sure what this is for, I d have guessed a battery check if the light were easier to see.

On the top of the camera there is a hot shoe (with a plastic blanking plate, a nice touch), a mode selector and two buttons. The larger button on the front replicates the shutter release button on the body (which continues to function as well) while the smaller button to the rear of the camera controls the motor depending on the current state of the camera it cocks the shutter, winds the film and resets the mirror when it is locked up. The mode dial offers three modes: off, S and C. S and C appear to correspond to the S and C modes of the main camera, single and continuous mirror lockup shots.

Overall with this grip fitted and a prism attached the camera operates very similarly to a 35mm SLR in terms of film winding and so on. It is of course heavier (the whole setup weighs in at 2.5kg) but balanced very well and the grip is very comfortable to use.

On the top of the camera there is a hot shoe (with a plastic blanking plate, a nice touch), a mode selector and two buttons. The larger button on the front replicates the shutter release button on the body (which continues to function as well) while the smaller button to the rear of the camera controls the motor depending on the current state of the camera it cocks the shutter, winds the film and resets the mirror when it is locked up. The mode dial offers three modes: off, S and C. S and C appear to correspond to the S and C modes of the main camera, single and continuous mirror lockup shots.

Overall with this grip fitted and a prism attached the camera operates very similarly to a 35mm SLR in terms of film winding and so on. It is of course heavier (the whole setup weighs in at 2.5kg) but balanced very well and the grip is very comfortable to use.

cloud-init process on the agent. This automatic configuration may optionally serve to bootstrap the instance into a more complete configuration management system. The user may or may not ever actually log in to the system at all.fai-server package on your instance and clone the fai-cloud-images git repository. (I'll assume the repository is cloned to /srv/fai/config.) In order to generate your own disk image that generally matches what we've been distributing, you'll use a command like:

sudo fai-diskimage --hostname stretch-image --size 8G \

--class DEBIAN,STRETCH,AMD64,GRUB_PC,DEVEL,CLOUD,EC2 \

/tmp/stretch-image.raw

/tmp/stretch-image.raw, create some partitions and filesystems within it, and install and configure a bunch of packages into it. Exactly what packages it installs and how it configures them will be determined by the FAI config tree and the classes provided on the command line. The package_config subdirectory of the FAI configuration contains several files, the names of which are FAI classes. Activating a given class by referencing it on the fai-diskimage command line instructs FAI to process the contents of the matching package_config file if such a file exists. The files use a simple grammar that provides you with the ability to request certain packages to be installed or removed.

Let's say for example that you'd like to build a custom image that looks mostly identical to Debian's images, but that also contains the Apache HTTP server. You might do that by introducing a new file to package_config/HTTPD file, as follows:

PACKAGES install

apache2

fai-diskimage, you'll add HTTPD to the list of classes:

sudo fai-diskimage --hostname stretch-image --size 8G \

--class DEBIAN,STRETCH,AMD64,GRUB_PC,DEVEL,CLOUD,EC2,HTTPD \

/tmp/stretch-image.raw

files subdirectory based on classes. The scripts/EC2/10-apt script provides a basic example of using fcopy to select and install an apt sources.list file. The files/etc/apt/sources.list/ subdirectory contains both an EC2 and a GCE file. Since we've enabled the EC2 class on our command line, fcopy will find and install that file. You'll notice that the sources.list subdirectory also contains a preinst file, which fcopy can use to perform additional actions prior to actually installing the specified file. postinst scripts are also supported.

Beyond package and file installation, FAI also provides mechanisms to support debconf preseeding, as well as hooks that are executed at various stages of the image generation process. I recommend following the examples in the fai-cloud-images repo, as well as the FAI guide for more details. I do have one caveat regarding the documentation, however: FAI was originally written to help provision bare-metal systems, and much of its documentation is written with that use case in mind. The cloud image generation process is able to ignore a lot of the complexity of these environments (for example, you don't need to worry about pxeboot and tftp!) However, this means that although you get to ignore probably half of the FAI Guide, it's not immediately obvious which half it is that you get to ignore.

Once you've generated your raw image, you can inspect it by telling Linux about the partitions contained within, and then mount and examine the filesystems. For example:

admin@ip-10-0-0-64:~$ sudo partx --show /tmp/stretch-image.raw

NR START END SECTORS SIZE NAME UUID

1 2048 16777215 16775168 8G ed093314-01

admin@ip-10-0-0-64:~$ sudo partx -a /tmp/stretch-image.raw

partx: /dev/loop0: error adding partition 1

admin@ip-10-0-0-64:~$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

xvda 202:0 0 8G 0 disk

xvda1 202:1 0 1007.5K 0 part

xvda2 202:2 0 8G 0 part /

loop0 7:0 0 8G 0 loop

loop0p1 259:0 0 8G 0 loop

admin@ip-10-0-0-64:~$ sudo mount /dev/loop0p1 /mnt/

admin@ip-10-0-0-64:~$ ls /mnt/

bin/ dev/ home/ initrd.img.old@ lib64/ media/ opt/ root/ sbin/ sys/ usr/ vmlinuz@

boot/ etc/ initrd.img@ lib/ lost+found/ mnt/ proc/ run/ srv/ tmp/ var/ vmlinuz.old@

dd to write your image to the secondary volume, e.g. sudo dd if=/tmp/stretch-image.raw of=/dev/xvdbvolume-to-ami.sh script in the fail-cloud-image repo to snapshot the volume and register the resulting snapshot with AWS as a new AMI. Example: ./volume-to-ami.sh vol-04351c30c46d7dd6evolume-to-ami.sh script must be run with access to AWS credentials that grant access to several EC2 API calls: describe-snapshots, create-snapshot, and register-image. It recognizes a --help command-line flag and several options that modify characteristics of the AMI that it registers. When volume-to-ami.sh completes, it will print the AMI ID of your new image. You can now work with this image using standard AWS workflows.

As always, we welcome feedback and contributions via the debian-cloud mailing list or #debian-cloud on IRC.

Before starting, have to say hindsight as they say is always 20/20. I was moaning about my 6/7 hour trip few blog posts back but now came to know about the 17.5 hr. flights (17.5x800km/hr=14000 km.) which are happening around me.

I would say I was whining about nothing seeing those flights. I can t even imagine how people would feel in those flights. Six hours were too much in the tin-can, thankfully though I was in the aisle seat. In 14 hours most people would probably give to Air rage .

I just saw an excellent article on the subject. I also came to know that seat-selection and food on a long-haul flights are a luxury, hence that changes the equation quite a bit as well. So on these facts, it seems Qatar Airways treated me quite well as I was able to use both those options.

Disclaimer My knowledge about birds/avian is almost non-existent, Hence feel free to correct me if I do go wrong anywhere.

Coming back to earth literally

Before starting, have to say hindsight as they say is always 20/20. I was moaning about my 6/7 hour trip few blog posts back but now came to know about the 17.5 hr. flights (17.5x800km/hr=14000 km.) which are happening around me.

I would say I was whining about nothing seeing those flights. I can t even imagine how people would feel in those flights. Six hours were too much in the tin-can, thankfully though I was in the aisle seat. In 14 hours most people would probably give to Air rage .

I just saw an excellent article on the subject. I also came to know that seat-selection and food on a long-haul flights are a luxury, hence that changes the equation quite a bit as well. So on these facts, it seems Qatar Airways treated me quite well as I was able to use both those options.

Disclaimer My knowledge about birds/avian is almost non-existent, Hence feel free to correct me if I do go wrong anywhere.

Coming back to earth literally , I will have to share a bit of South Africa as that is part and parcel of what I m going to share next. Also many of the pictures shared in this particular blog post belong to KK who has shared them with me with permission to share it with the rest of the world.

When I was in South Africa, in the first couple of days as well as what little reading of South African History I had read before travelling, had known that the Europeans, specifically the Dutch ruled on South Africa for many years.

What was shared to me in the first day or two that Afrikaans is mostly spoken by Europeans still living in South Africa, some spoken by the coloured people as well. This tied in with the literature I had already read.

The Wikipedia page shares which language is spoken by whom and how the demographics play out if people are interested to know that.

One of the words or part of the word for places we came to know is bosch as is used in many a places. Bosch means wood or forest. After this we came to know about many places which were known as somethingbosch which signified to us that area is or was a forest.

On the second/third day Chirayu (pictured, extreme left) shared the idea of going to Eagle Encounters. Other people pictured in the picture are yours truly, some of the people from GSOC, KK is in the middle, the driver Leonard something who took us to Eagle Encounters on the right (pictured extreme right).

Update I was informed that it was a joint plan between Chirayu and KK. They also had some other options planned which later got dropped by the wayside.

, I will have to share a bit of South Africa as that is part and parcel of what I m going to share next. Also many of the pictures shared in this particular blog post belong to KK who has shared them with me with permission to share it with the rest of the world.

When I was in South Africa, in the first couple of days as well as what little reading of South African History I had read before travelling, had known that the Europeans, specifically the Dutch ruled on South Africa for many years.

What was shared to me in the first day or two that Afrikaans is mostly spoken by Europeans still living in South Africa, some spoken by the coloured people as well. This tied in with the literature I had already read.

The Wikipedia page shares which language is spoken by whom and how the demographics play out if people are interested to know that.

One of the words or part of the word for places we came to know is bosch as is used in many a places. Bosch means wood or forest. After this we came to know about many places which were known as somethingbosch which signified to us that area is or was a forest.

On the second/third day Chirayu (pictured, extreme left) shared the idea of going to Eagle Encounters. Other people pictured in the picture are yours truly, some of the people from GSOC, KK is in the middle, the driver Leonard something who took us to Eagle Encounters on the right (pictured extreme right).

Update I was informed that it was a joint plan between Chirayu and KK. They also had some other options planned which later got dropped by the wayside.

Tidbit Just a few years ago, it was a shocker to me to know/realize that what commonly goes/known in the country as a parrot by most people is actually a Parakeet. As can be seen in the article linked, they are widely distributed in India.

While I was young, I used to see the rose-ringed parakeets quite a bit around but nowadays due to probably pollution and other factors, they are noticeably less. They are popular as pets in India. I don t know what Pollito would think about that, don t think he would think good.

Tidbit Just a few years ago, it was a shocker to me to know/realize that what commonly goes/known in the country as a parrot by most people is actually a Parakeet. As can be seen in the article linked, they are widely distributed in India.

While I was young, I used to see the rose-ringed parakeets quite a bit around but nowadays due to probably pollution and other factors, they are noticeably less. They are popular as pets in India. I don t know what Pollito would think about that, don t think he would think good.

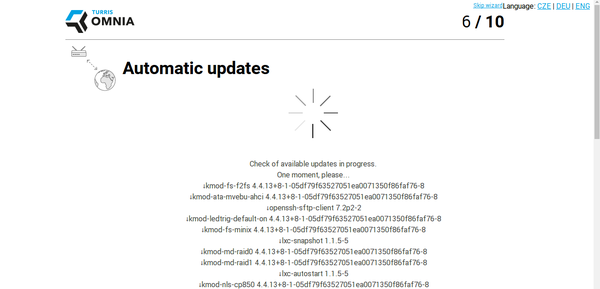

The Omnia router is a followup project on CZ.NIC's original

research project, the Turris. The goal of the project was to

identify hostile traffic on end-user networks and develop global

responses to those attacks across every monitored device. The Omnia is

an extension of the original project: more features were added and

data collection is now opt-in. Whereas the original Turris was simply

a home router, the new Omnia router includes:

The Omnia router is a followup project on CZ.NIC's original

research project, the Turris. The goal of the project was to

identify hostile traffic on end-user networks and develop global

responses to those attacks across every monitored device. The Omnia is

an extension of the original project: more features were added and

data collection is now opt-in. Whereas the original Turris was simply

a home router, the new Omnia router includes:

uname -a). It is unclear why those patches are necessary since the

ARMv7 Armada 385 CPU has been

supported in Linux since at least 4.2-rc1, but it is common for

OpenWRT ports to ship patches to the kernel, either to backport

missing functionality or perform some optimization.

There has been some pressure from backers to petition Turris to

"speedup the process of upstreaming Omnia support to OpenWrt". It

could be that the team is too busy with delivering the devices already

ordered to complete that process at this point. The software is

available on the CZ-NIC GitHub repository and the actual Linux

patches can be found here and here. CZ.NIC also operates a

private GitLab instance where more software is available. There is

technically no reason why you wouldn't be able to run your own

distribution on the Omnia router: OpenWRT development snapshots should

be able to run on the Omnia hardware and some people have

installed Debian on Omnia. It may require some customization

(e.g. the kernel) to make sure the Omnia hardware is correctly

supported. Most people seem to prefer to run TurrisOS because of

the extra features.

The hardware itself is also free and open for the most part. There is

a binary blob needed for the 5GHz wireless card, which seems to be the

only proprietary component on the board. The schematics of the device

are available through the Omnia wiki, but oddly not in the GitHub

repository like the rest of the software.

For example, the wizard, once connected to the network, goes through a

full system upgrade and will, by default, automatically upgrade itself

(including reboots) when new updates become available. Users have to

opt-in to the automatic updates, and can chose to automate only the

downloading and installation of the updates without having the device

reboot on its own. Reboots are also performed during user-specified time

frames (by default, Omnia applies kernel updates during the night). I

also liked the "skip" button that allowed me to completely bypass the

wizard and configure the device myself, through the regular OpenWRT

systems (like LuCI or SSH) if I needed to.

For example, the wizard, once connected to the network, goes through a

full system upgrade and will, by default, automatically upgrade itself

(including reboots) when new updates become available. Users have to

opt-in to the automatic updates, and can chose to automate only the

downloading and installation of the updates without having the device

reboot on its own. Reboots are also performed during user-specified time

frames (by default, Omnia applies kernel updates during the night). I

also liked the "skip" button that allowed me to completely bypass the

wizard and configure the device myself, through the regular OpenWRT

systems (like LuCI or SSH) if I needed to.

Notwithstanding the antenna connectors themselves, the hardware is

nice. I ordered the black metal case, and I must admit I love the

many LED lights in the front. It is especially useful to have color

changes in the reset procedure: no more guessing what state the

device is in or if I pressed the reset button long enough. The LEDs

can also be dimmed to reduce the glare that our electronic devices

produce.

All this comes at a price, however: at \$250 USD, it is a much higher

price tag than common home routers, which typically go for around

\$50. Furthermore, it may be difficult to actually get the device,

because no orders are being accepted on the Indiegogo site after

October 31. The Turris team doesn't actually want to deal with retail

sales and has now delegated retail sales to other stores, which

are currently limited to European deliveries.

Notwithstanding the antenna connectors themselves, the hardware is

nice. I ordered the black metal case, and I must admit I love the

many LED lights in the front. It is especially useful to have color

changes in the reset procedure: no more guessing what state the

device is in or if I pressed the reset button long enough. The LEDs

can also be dimmed to reduce the glare that our electronic devices

produce.

All this comes at a price, however: at \$250 USD, it is a much higher

price tag than common home routers, which typically go for around

\$50. Furthermore, it may be difficult to actually get the device,

because no orders are being accepted on the Indiegogo site after

October 31. The Turris team doesn't actually want to deal with retail

sales and has now delegated retail sales to other stores, which

are currently limited to European deliveries.

Note: this article first appeared in the Linux Weekly News.

(It is a gross oversimplification, but for the purposes of OpenStack CI, Jenkins is pretty much used as a glorified ssh/scp wrapper. Zuul Version 3, under development, is working to remove the need for Jenkins to be involved at all).Well some recent security issues with Jenkins and other changes has led to a roll-out of what is being called Zuul 2.5, which has indeed removed Jenkins and makes extensive use of Ansible as the basis for running CI tests in OpenStack. Since I already had the diagram, it seems worth updating it for the new reality.

The process starts when a developer uploads their code to gerrit via the git-review tool. There is no further action required on their behalf and the developer simply waits for results of their jobs.

Gerrit provides a JSON-encoded "fire-hose" output of everything happening to it. New reviews, votes, updates and more all get sent out over this pipe. Zuul is the overall scheduler that subscribes itself to this information and is responsible for managing the CI jobs appropriate for each change.

Zuul has a configuration that tells it what jobs to run for what projects. Zuul can do lots of interesting things, but for the purposes of this discussion we just consider that it puts the jobs it wants run into gearman for a launcher to consume. gearman is a job-server; as they explain it "[gearman] provides a generic application framework to farm out work to other machines or processes that are better suited to do the work". Zuul puts into gearman basically a tuple (job-name, node-type) for each job it wants run, specifying the unique job name to run and what type of node it should be run on.

A group of Zuul launchers are subscribed to gearman as workers. It is these Zuul launchers that will consume the job requests from the queue and actually get the tests running. However, a launcher needs two things to be able to run a job a job definition (what to actually do) and a worker node (somewhere to do it). The first part what to do is provided by job-definitions stored in external YAML files. The Zuul launcher knows how to process these files (with some help from Jenkins Job Builder, which despite the name is not outputting XML files for Jenkins to consume, but is being used to help parse templates and macros within the generically defined job definitions). Each Zuul launcher gets these definitions pushed to it constantly by Puppet, thus each launcher knows about all the jobs it can run automatically. Of course Zuul also knows about these same job definitions; this is the job-name part of the tuple we said it put into gearman. The second part somewhere to run the test takes some more explaining. To the next point...

Several cloud companies donate capacity in their clouds for OpenStack to run CI tests. Overall, this capacity is managed by a customized management tool called nodepool (you can see the details of this capacity at any given time by checking the nodepool configuration). Nodepool watches the gearman queue and sees what requests are coming out of Zuul. It looks at node-type of jobs in the queue (i.e. what platform the job has requested to run on) and decides what types of nodes need to start and which cloud providers have capacity to satisfy demand. Nodepool will start fresh virtual machines (from images built daily as described in the prior post), monitor their start-up and, when they're ready, put a new "assignment job" back into gearman with the details of the fresh node. One of the active Zuul launchers will pick up this assignment job and register the new node to itself.

At this point, the Zuul launcher has what it needs to actually get jobs started. With an fresh node registered to it and waiting for something to do, the Zuul launcher can advertise its ability to consume one of the waiting jobs from the gearman queue. For example, if a ubuntu-trusty node is provided to the Zuul launcher, the launcher can now consume from gearman any job it knows about that is intended to run on an ubuntu-trusty node type. If you're looking at the launcher code this is driven by the NodeWorker class you can see this being created in response to an assignment via LaunchServer.assignNode. To actually run the job where the "job hits the metal" as it were the Zuul launcher will dynamically construct an Ansible playbook to run. This playbook is a concatenation of common setup and teardown operations along with the actual test scripts the jobs wants to run. Using Ansible to run the job means all the flexibility an orchestration tool provides is now available to the launcher. For example, there is a custom console streamer library that allows us to live-stream the console output for the job over a plain TCP connection, and there is the possibility to use projects like ARA for visualisation of CI runs. In the future, Ansible will allow for better coordination when running multiple-node testing jobs after all, this is what orchestration tools such as Ansible are made for! While the Ansible run can be fairly heavyweight (especially when you're talking about launching thousands of jobs an hour), the system scales horizontally with more launchers able to consume more work easily. When checking your job results on logs.openstack.org you will see a _zuul_ansible directory now which contains copies of the inventory, playbooks and other related files that the launcher used to do the test run.

Eventually, the test will finish. The Zuul launcher will put the result back into gearman, which Zuul will consume (log copying is interesting but a topic for another day). The testing node will be released back to nodepool, which destroys it and starts all over again nodes are not reused and also have no sensitive details on them, as they are essentially publicly accessible. Zuul will wait for the results of all jobs for the change and post the result back to Gerrit; it either gives a positive vote or the dreaded negative vote if required jobs failed (it also handles merges to git, but that is also a topic for another day).

I put some packages together this weekend. It s been a while since I ve debuilt anything officially.

I put some packages together this weekend. It s been a while since I ve debuilt anything officially.

Flying over the airport at Moundridge, KS

Memories

Back when I was a child, maybe about the age my children are now, I d be outside in the evening and see this orange plane flying overhead. Our neighbor Don had a small ultralight plane and a grass landing strip next to his house. I remember longing to be up in the sky with Don, exploring the world from up there. At that age, I didn t know all the details of why that wouldn t work I just knew I wanted to ride in it.

It wasn t until I was about 11 that I flew for the first time. I still remember that TWA flight with my grandma, taking off early in the morning and flying just a little ways above the puffy clouds lit up all yellow and orange by the sunrise. Even 25 years later, that memory still holds as one of the most beautiful scenes I have ever seen.

Exploring

I have always been an explorer.

When I go past something interesting, I love to go see what it looks like inside. I enjoy driving around Kansas with Laura, finding hidden waterfalls, old county courthouses, ghost towns, beautiful old churches, even small-town restaurants. I explore things around me, too once taking apart a lawnmower engine as a child, nowadays building HF antennas in my treetops or writing code for Linux. If there is little to learn about something, it becomes less interesting to me.

I see this starting to build in my children, too. Since before they could walk, if we were waiting for something in a large building, we d go exploring.

A patch of rain over Hillsboro, KS

The New World

A pilot once told me, Nobody can become a pilot without it changing the way they see the world and then, changing their life.

I doubted that. But it was true. One of the most poetic sights I know is flying a couple thousand feet above an interstate highway at night, following it to my destination. All those red and white lights, those metal capsules of thousands of lives and thousands of stories, stretching out as far as the eye can see in either direction.

Kansas sunset from the plane

When you re in a plane, that small town nowhere near a freeway that always seemed so far away suddenly is only a 15-minute flight away, not even enough time to climb up to a high cruise altitude. Two minutes after takeoff, any number of cities that are an hour s drive away are visible simultaneously, their unique features already recognizable: a grain elevator, oil refinery, college campus, lake, whatever.

And all the houses you fly over each with people in them. Some pretty similar to you, some apparently not. But pretty soon you realize that we all are humans, and we aren t all that different. You can t tell a liberal from a conservative from the sky, nor a person s race or religion, nor even see the border between states. Towns and cities are often nameless from the sky, unless you re really low; only your navigation will tell you where you are.

I ve had the privilege to fly to small out-of-the-way airports, the kind that have a car that pilots can use for free to go into town and get lunch, and leave the key out for them. There I ve met many friendly people. I ve also landed my little Cessna at a big commercial airport where I probably used only 1/10th of the runway, on a grass runway that was barely maintained at all. I ve flown to towns I d driven to or through many times, discovering the friendly folks at the small airport out of town. I ve flown to parts of Kansas I ve never been to before, discovered charming old downtowns and rolling hills, little bursts of rain and beautiful sunsets that seem to turn into a sea.

Parked at the Smith Center, KS airport terminal, about to meet some wonderful people

For a guy that loves exploring the nooks and crannies of the world that everyone else drives by on their way to a major destination, being a pilot has meant many soul-filling moments.

Hard Work

I knew becoming a pilot would be a lot of hard work, and thankfully I remembered stories like that when I finally concluded it would be worth it. I found that I had an aptitude for a lot of things that many find difficult about being a pilot: my experience with amateur radio made me a natural at talking to ATC, my fascination with maps and navigation meant I already knew how to read aviation sectional maps before I even started my training and knew how to process that information in the cockpit, my years as a system administrator and programmer trained me with a careful and methodical decision-making process. And, much to the surprise of my flight instructor, I couldn t wait to begin the part of training about navigating using VORs (VHF radio beacons). I guess he, like many student pilots, had struggled with that, but I was fascinated by this pre-GPS technology (which I still routinely use in my flight planning, as a backup in case the GPS constellation or a GPS receiver fails). So that left the reflexes of flight, the art of it, as the parts I had to work on the hardest.

The exam with the FAA is not like getting your driver s license. It s a multi-stage and difficult process. So when the FAA Designated Pilot Examiner said congratulations, pilot! and later told my flight instructor that you did a really good job with this one, I felt a true sense of accomplishment.

Some of my prep materials

Worth It

Passengers in a small plane can usually hear all the radio conversations going on. My family has heard me talking to air traffic control, to small and big planes. My 6-year-old son Oliver was playing yesterday, and I saw him pick up a plane and say this:

Two-four-niner-golf requesting to land on runway one-seven . Two-four-niner-golf back-taxi on one-seven Two-four-niner-golf ready to takeoff on runway one-seven!

That was a surprisingly accurate representation of some communication a pilot might have (right down to the made-up tailnumber with the spelling alphabet!)

It just got more involved from there!

Jacob and Oliver love model train shows. I couldn t take them to one near us, but there was one in Joplin, MO. So the day before Easter, while Laura was working on her Easter sermon, two excited boys and I (frankly also excited) climbed into a plane and flew to Joplin.

We had a great time at the train show, discovered a restaurant specializing in various kinds of hot dogs (of course they both wanted to eat there), played in a park, explored the city, and they enjoyed the free cookies at the general aviation terminal building while I traded tips on fun places to fly with other pilots.

When it comes right down to it, the smiles of the people I fly with are the most beautiful thing in the air.

Jacob after his first father-son flight with me

Trusted Platform Modules are fairly unintelligent devices. They can do some crypto, but they don't have any ability to directly monitor the state of the system they're attached to. This is worked around by having each stage of the boot process "measure" state into registers (Platform Configuration Registers, or PCRs) in the TPM by taking the SHA1 of the next boot component and performing an extend operation. Extend works like this:

Trusted Platform Modules are fairly unintelligent devices. They can do some crypto, but they don't have any ability to directly monitor the state of the system they're attached to. This is worked around by having each stage of the boot process "measure" state into registers (Platform Configuration Registers, or PCRs) in the TPM by taking the SHA1 of the next boot component and performing an extend operation. Extend works like this: The last two major autopkgtest releases (3.18 from November, and 3.19 fresh from yesterday) bring some new features that are worth spreading.

New LXD virtualization backend

3.19 debuts the new adt-virt-lxd virtualization backend. In case you missed it, LXD is an API/CLI layer on top of LXC which introduces proper image management, seamlessly use images and containers on remote locations, intelligently caching them locally, automatically configure performant storage backends like zfs or btrfs, and just generally feels really clean and much simpler to use than the classic LXC.

Setting it up is not complicated at all. Install the

The last two major autopkgtest releases (3.18 from November, and 3.19 fresh from yesterday) bring some new features that are worth spreading.

New LXD virtualization backend

3.19 debuts the new adt-virt-lxd virtualization backend. In case you missed it, LXD is an API/CLI layer on top of LXC which introduces proper image management, seamlessly use images and containers on remote locations, intelligently caching them locally, automatically configure performant storage backends like zfs or btrfs, and just generally feels really clean and much simpler to use than the classic LXC.

Setting it up is not complicated at all. Install the lxd package (possibly from the backports PPA if you are on 14.04 LTS), and add your user to the lxd group. Then you can add the standard LXD image server with

lxc remote add lco https://images.linuxcontainers.org:8443and use the image to run e. g. the libpng test from the archive:

adt-run libpng --- lxd lco:ubuntu/trusty/i386 adt-run libpng --- lxd lco:debian/sid/amd64The adt-virt-lxd.1 manpage explains this in more detail, also how to use this to run tests in a container on a remote host (how cool is that!), and how to build local images with the usual autopkgtest customizations/optimizations using adt-build-lxd. I have btrfs running on my laptop, and LXD/autopkgtest automatically use that, so the performance really rocks. Kudos to St phane, Serge, Tycho, and the other LXD authors! The motivation for writing this was to make it possible to move our armhf testing into the cloud (which for $REASONS requires remote containers), but I now have a feeling that soon this will completely replace the existing adt-virt-lxc virt backend, as its much nicer to use. It is covered by the same regression tests as the LXC runner, and from the perspective of package tests that you run in it it should behave very similar to LXC. The one problem I m aware of is that

autopkgtest-reboot-prepare is broken, but hardly anything is using that yet. This is a bit complicated to fix, but I expect it will be in the next few weeks.

MaaS setup script

While most tests are not particularly sensitive about which kind of hardware/platform they run on, low-level software like the Linux kernel, GL libraries, X.org drivers, or Mir very much are. There is a plan for extending our automatic tests to real hardware for these packages, and being able to run autopkgtests on real iron is one important piece of that puzzle.

MaaS (Metal as a Service) provides just that it manages a set of machines and provides an API for installing, talking to, and releasing them. The new maas autopkgtest ssh setup script (for the adt-virt-ssh backend) brings together autopkgtest and real hardware. Once you have a MaaS setup, get your API key from the web UI, then you can run a test like this:

adt-run libpng --- ssh -s maas -- \

--acquire "arch=amd64 tags=touchscreen" -r wily \

http://my.maas.server/MAAS 123DEADBEEF:APIkey

The required arguments are the MaaS URL and the API key. Without any further options you will get any available machine installed with the default release. But usually you want to select a particular one by architecture and/or tags, and install a particular distro release, which you can do with the -r/--release and --acquire options.

Note that this is not wired into Ubuntu s production CI environment, but it will be.

Selectively using packages from -proposed

Up until a few weeks ago, autopkgtest runs in the CI environment were always seeing/using the entirety of -proposed. This often led to lockups where an application foo and one of its dependencies libbar got a new version in -proposed at the same time, and on test regressions it was not clear at all whose fault it was. This often led to perfectly good packages being stuck in -proposed for a long time, and a lot of manual investigation about root causes.

.

These days we are using a more fine-grained approach: A test run is now specific for a trigger , that is, the new package in -proposed (e. g. a new version of libbar) that caused the test (e. g. for foo ) to run. autopkgtest sets up apt pinning so that only the binary packages for the trigger come from -proposed, the rest from -release. This provides much better isolation between the mush of often hundreds of packages that get synced or uploaded every day.

This new behaviour is controlled by an extension of the --apt-pocket option. So you can say

adt-run --apt-pocket=proposed=src:foo,libbar1,libbar-data ...and then only the binaries from the

foo source, libbar1, and libbar-data will come from -proposed, everything else from -release.

Caveat:Unfortunately apt s pinning is rather limited. As soon as any of the explicitly listed packages depends on a package or version that is only available in -proposed, apt falls over and refuses the installation instead of taking the required dependencies from -proposed as well. In that case, adt-run falls back to the previous behaviour of using no pinning at all. (This unfortunately got worse with apt 1.1, bug report to be done). But it s still helpful in many cases that don t involve library transitions or other package sets that need to land in lockstep.

Unified testbed setup script